Adventures with eBPF and Prometheus

A story about understanding how eBPF and Prometheus can play together to give useful and otherwise hard-to-obtain insights.

Recently I have been learning more about Linux systems internals and stumbled upon Brendan Gregg’s work using the extended Berkeley Packet Filter (eBPF) system in Linux kernels to perform various system introspection tasks. As part of my work at Sitewards I’ve been instrumenting many systems with the open source monitoring tool Prometheus, and the primitives used in the eBPF tooling (counts, gauges and histograms) seemed a good fit for the metric types Prometheus already works with. So, it seems reasonable to dig in and see whether we can plug this eBPF tooling into our Prometheus stacks to get data that would otherwise need bespoke tooling.

eBPF

The original BPF

eBPF has its origins in BPF, or Berkeley Packet Filter. Originally proposed in 1992 by Steven McCanne and Van Jacobson, BPF is a raw interface to the “data link” layer of a network device, designed to filter packets sent to that network device. A common example of a BPF implementation is a tcpdump expression. Given the expression:

tcpdump 'host 192.168.0.1'

the BPF bytecode looks like:

# Using the `-d` switch dumps the BPF output in an
# "assembly-like" way
#
# tcpdump 'host 192.168.0.1' -d
(000) ldh [12]
(001) jeq #0x800 jt 2 jf 6
(002) ld [26]
(003) jeq #0xc0a80001 jt 12 jf 4
(004) ld [30]
(005) jeq #0xc0a80001 jt 12 jf 13
(006) jeq #0x806 jt 8 jf 7
(007) jeq #0x8035 jt 8 jf 13
(008) ld [28]
(009) jeq #0xc0a80001 jt 12 jf 10
(010) ld [38]
(011) jeq #0xc0a80001 jt 12 jf 13
(012) ret #262144
(013) ret #0

Cloudflare has an excellent post describing how to read this bytecode in depth, but for our purposes it suffices to say that BPF can be used to filter packets arriving at a network device at a very early stage of processing.
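To make the listing a little less opaque, here is a minimal sketch of an interpreter for just the four instructions it uses (ldh, ld, jeq and ret), written in Python. It is an illustration only and not how the kernel implements BPF; the `run_filter` and `ipv4_frame` helpers and the test frames are made up for this example.

```python
import socket
import struct

# The tcpdump -d listing above as
# (opcode, operand, jump-if-true, jump-if-false).
PROGRAM = [
    ("ldh", 12, None, None),       # (000) load the EtherType
    ("jeq", 0x800, 2, 6),          # (001) IPv4?
    ("ld", 26, None, None),        # (002) load the IPv4 source address
    ("jeq", 0xC0A80001, 12, 4),    # (003) is it 192.168.0.1?
    ("ld", 30, None, None),        # (004) load the IPv4 destination address
    ("jeq", 0xC0A80001, 12, 13),   # (005)
    ("jeq", 0x806, 8, 7),          # (006) ARP?
    ("jeq", 0x8035, 8, 13),        # (007) RARP?
    ("ld", 28, None, None),        # (008) load the ARP sender address
    ("jeq", 0xC0A80001, 12, 10),   # (009)
    ("ld", 38, None, None),        # (010) load the ARP target address
    ("jeq", 0xC0A80001, 12, 13),   # (011)
    ("ret", 262144, None, None),   # (012) accept: capture up to 262144 bytes
    ("ret", 0, None, None),        # (013) reject: capture nothing
]


def run_filter(program, packet):
    """Return the number of bytes to capture; 0 means the packet is dropped."""
    acc = 0  # the accumulator register
    pc = 0   # index of the next instruction
    while True:
        op, k, jt, jf = program[pc]
        try:
            if op == "ldh":    # load a 16-bit big-endian value at offset k
                acc = struct.unpack_from("!H", packet, k)[0]
                pc += 1
            elif op == "ld":   # load a 32-bit big-endian value at offset k
                acc = struct.unpack_from("!I", packet, k)[0]
                pc += 1
            elif op == "jeq":  # branch on accumulator == k
                pc = jt if acc == k else jf
            elif op == "ret":
                return k
            else:
                raise ValueError(f"unsupported opcode: {op}")
        except struct.error:
            return 0  # a load past the end of the packet rejects it


def ipv4_frame(src, dst):
    """Build a made-up Ethernet + IPv4 header with only the fields we test."""
    ethernet = bytes(12) + struct.pack("!H", 0x0800)
    ip_header = bytes(12) + socket.inet_aton(src) + socket.inet_aton(dst)
    return ethernet + ip_header


print(run_filter(PROGRAM, ipv4_frame("192.168.0.1", "10.0.0.1")))
print(run_filter(PROGRAM, ipv4_frame("10.0.0.2", "10.0.0.1")))
```

The first frame has 192.168.0.1 as its source address and is accepted; the second mentions the host in neither address and falls through to the final `ret #0`.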

The successor, eBPF

Over time, some of the original design properties of BPF were rendered ineffective by the move to modern processors with 64-bit registers and the new instructions that accompanied them. Alexei Starovoitov introduced the eBPF design to take advantage of modern hardware with a new virtual machine that more closely matched contemporary processors.

This new eBPF design was up to 4 times faster than the original (now “classic BPF” or “cBPF”), and the JIT compiler was supported on many different architectures. Originally, eBPF was only used inside the kernel, with cBPF programs transcoded to their eBPF counterparts, but in 2014 Alexei exposed it to userland.

This new eBPF can be run not only to process packets, but at a number of defined points in the Linux kernel. It’s possible to use this eBPF framework for:

- Modifying the settings of a network socket

- Restricting program control with seccomp-bpf

- Analysing kernel and userland behaviour with tracepoints, uprobes, kprobes and perf events

A suite of tools is available now to make this complex system available to systems administrators via the IOVisor project. It’s even started to be packaged for Debian with Buster!

Practically, eBPF allows us to run performant analysis on processes operating in both the kernel and userland without modifying that process directly, but by using already defined analysis utilities.

Prometheus

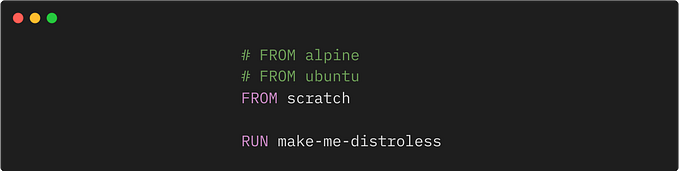

Prometheus is a time series database hosted by the Cloud Native Computing Foundation. It’s written in Go and trivially easy to deploy. Indeed, it’s packaged by many distributions directly now, and can be as simple as:

$ apt-get install prometheus-server

Perhaps most attractively in our case, it is trivial to get metrics from other, third party solutions into Prometheus. Prometheus expects a wire format in plain text that looks something like:

# HELP process_virtual_memory_bytes Virtual memory size in bytes.
# TYPE process_virtual_memory_bytes gauge
process_virtual_memory_bytes 1.434054656e+09

This should be exposed over a HTTP/S server which Prometheus can be configured to scrape on a regular interval. This is stored and can be expressed as a graph in the Prometheus server:

Prometheus has many third party integrations, and some applications are instrumented directly to expose metrics in the Prometheus format. Additionally, this format is being standardised as “OpenMetrics”, and is well supported by a number of other time series databases and monitoring tools.
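As a rough illustration of how little is needed to expose a metric in this format, here is a minimal sketch using only the Python standard library. The `render_gauge`, `MetricsHandler` and `serve` names, the `demo_answer` metric and port 8000 are all made up for this example; a real application would normally use an official Prometheus client library instead.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


def render_gauge(name, help_text, value):
    """Render a single gauge in the plain-text exposition format."""
    return (
        f"# HELP {name} {help_text}\n"
        f"# TYPE {name} gauge\n"
        f"{name} {float(value)!r}\n"
    )


class MetricsHandler(BaseHTTPRequestHandler):
    """Serve one hypothetical gauge on /metrics for Prometheus to scrape."""

    def do_GET(self):
        if self.path != "/metrics":
            self.send_error(404)
            return
        body = render_gauge(
            "demo_answer", "A made-up gauge for illustration.", 42
        ).encode("utf-8")
        self.send_response(200)
        self.send_header(
            "Content-Type", "text/plain; version=0.0.4; charset=utf-8"
        )
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8000):
    """Block forever, answering scrapes on 127.0.0.1:<port>/metrics."""
    HTTPServer(("127.0.0.1", port), MetricsHandler).serve_forever()


print(render_gauge(
    "process_virtual_memory_bytes", "Virtual memory size in bytes.", 1434054656
))
```

Calling `serve()` and pointing a scrape target at `127.0.0.1:8000` would be enough for Prometheus to start collecting the gauge.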

Practically, Prometheus is an excellent time series database and monitoring tool. Even if it’s not used in production, it provides a mechanism to prototype and understand this sort of instrumentation.

The eBPF exporter

Prometheus’s third party integrations are called “exporters”. Broadly, they’re small binaries that query applications in their own wire format, or through other direct instrumentation, and expose the information they find in the Prometheus format. There are many different exporters, such as:

and many others. It’s rare that we’ll need to create one.

Initially the plan as part of this post was to write a new exporter for eBPF. However, luckily for us (though perhaps unluckily for my learning) Cloudflare have already published such an exporter. Further, it is already nicely packaged in an (under development) Ansible role by Cloud Alchemy.

Getting up and running

Unfortunately, neither is particularly stable just yet. At the time of writing the Ansible role doesn’t work in major ways, and the exporter doesn’t have compiled releases available via the releases section* as is customary for other exporters. Additionally, it’s not trivial to use the promu utility to package the exporter, as it appears to be difficult to compile the bcc library for static use. So, for now it’s a straightforward:

$ cd $(mktemp -d)
$ GOPATH=$(pwd) go get -v github.com/cloudflare/ebpf_exporter/...

Followed by

$ ./bin/ebpf_exporter --config.file=src/github.com/cloudflare/ebpf_exporter/examples/bio.yaml

to get the biolatency example up and running. Drop some quick and dirty configuration into /etc/prometheus/prometheus.yml:

scrape_configs:
  - job_name: "eBPF"
    static_configs:
      - targets:
          - "localhost:9435"

And we get a pretty graph!

To generate an increased load on the disk we can drop the system disk cache:

$ free && sync && echo 3 > /proc/sys/vm/drop_caches && free

And we see our disk read spike:

Note that Prometheus has rescaled the graphs in the above images. So, although it’s the same data, it’s at a different scale; the former is capped on the Y axis at “8” samples, the latter at “150”. Because the data is a histogram, the interesting part is how many of those large numbers fall into the high latency buckets, and how spread out those bars are. Understanding the data is a story for another day; for now, I’m mostly keen on simply ingesting it.
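One detail worth knowing when reading such histograms: in the Prometheus text format, each `_bucket` sample carries an `le` (“less than or equal”) label and its value is cumulative. The sketch below converts cumulative buckets back to per-bucket counts. The `per_bucket_counts` helper and the `demo_latency_seconds` metric with its values are invented for illustration, and the code assumes a single series with no labels other than `le`; the exporter’s real metrics carry more labels.

```python
import re

# Matches lines such as: demo_latency_seconds_bucket{le="0.01"} 10
BUCKET_LINE = re.compile(
    r'^(?P<name>\w+)_bucket\{(?P<labels>[^}]*)\}\s+(?P<value>\S+)$'
)
LE_LABEL = re.compile(r'le="([^"]+)"')


def per_bucket_counts(exposition_text, metric):
    """Turn cumulative histogram buckets into (upper_bound, count) pairs."""
    cumulative = []
    for line in exposition_text.splitlines():
        match = BUCKET_LINE.match(line.strip())
        if match is None or match.group("name") != metric:
            continue
        le = LE_LABEL.search(match.group("labels"))
        if le is None:
            continue
        # float() understands "+Inf", the bound of the final bucket.
        cumulative.append((float(le.group(1)), float(match.group("value"))))

    cumulative.sort()
    counts = []
    previous = 0.0
    for upper_bound, total in cumulative:
        counts.append((upper_bound, total - previous))
        previous = total
    return counts


# Made-up sample data, not real exporter output.
SAMPLE = """\
# TYPE demo_latency_seconds histogram
demo_latency_seconds_bucket{le="0.001"} 3
demo_latency_seconds_bucket{le="0.01"} 10
demo_latency_seconds_bucket{le="+Inf"} 12
demo_latency_seconds_sum 0.2
demo_latency_seconds_count 12
"""

for upper_bound, count in per_bucket_counts(SAMPLE, "demo_latency_seconds"):
    print(upper_bound, count)
```

In the sample, 3 observations took at most 1ms, a further 7 took between 1ms and 10ms, and 2 took longer than that.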

More interesting samples

I haven’t debugged a large number of issues where I was worried about block latency. However, I have debugged more than my share of maxed out CPUs.

When debugging what’s eating CPU time, it is perhaps interesting to know whether the CPU caches are being used effectively. The Cloudflare exporter supplies such an example:

$ sudo ./bin/ebpf_exporter --debug --config.file=src/github.com/cloudflare/ebpf_exporter/examples/llcstat.yaml

This allows us to track how many references were made to the “last level” CPU cache versus how many of those missed and went to main memory. This might provide a hint that the workload is not optimised correctly, and could be restructured to take better advantage of the CPU cache system on this machine.

Running with this configuration gives the graph:

Which tracks the cache misses over time. However, it’s hard to tell what’s a “good” or “bad” cache hit ratio absent the history and user facing metrics to correlate it against. It proves more interesting with different workloads. While writing this post, my machine was subjected to two sources of CPU load, one of which was less expected than the other:

- The tool stress, designed to stress test the system

- My Gnome display manager crashing

Given the graph below:

One might naively assume that stress would produce more CPU cache misses. However, just the opposite seemed to happen. Under stress the cache misses bottomed out; I guess this is due to the repetitive nature of the workload, which simply repeats the same set of instructions. The spike is instead the moment Gnome crashed. It’s easy to spot, as I’m running the exporter within the Gnome desktop session, and the data is missing for the period the session was dead:

So, one could conclude that CPU cache misses are not directly related to CPU time, but might be related to performance in some capacity? It would take other metrics to be able to make this decision.
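For what it’s worth, the ratio itself is simple arithmetic over two counters sampled at two points in time. The sketch below is a hypothetical helper, not part of the exporter, and the numbers in it are made up:

```python
def miss_ratio(refs_before, refs_after, misses_before, misses_after):
    """Fraction of last-level cache references that missed between two samples.

    Returns None when no references were recorded in the interval (or a
    counter was reset), since the ratio is undefined in that case.
    """
    references = refs_after - refs_before
    misses = misses_after - misses_before
    if references <= 0 or misses < 0:
        return None
    return misses / references


# Made-up counter samples taken some seconds apart.
print(miss_ratio(1000, 3000, 100, 300))
```

Here 200 of the 2000 references in the interval missed, so one in ten went to main memory. Whether that is good or bad still depends on the workload, which is exactly the correlation problem described above.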

In Conclusion

eBPF provides an interesting tool that allows us to collect data that is otherwise not available in /proc or other static system representations. The existing work done by Cloudflare has made it much easier to import these samples into Prometheus, and while further work might be required to tune these eBPF programs to capture the required custom content, the future looks bright.

References

- Brendan Gregg's eBPF summary

- Brendan Gregg's “CPU utilisation is wrong”

- Cloudflare on the “forgotten bytecode”

- eBPF in Kubernetes

- Matt Fleming with a thorough introduction to eBPF

Updates

- * There are now precompiled binaries! Thanks Ivan!!

Thanks

- Ivan Babrou for writing the exporter and reviewing this post.

- Brendan Gregg for doing the initial work to surface this knowledge

- Cloudflare for doing the technical writing and description to make this work understandable